Microsoft Fabric Migration from Azure Synapse: What You Should Know

Migrating from Azure Synapse to Microsoft Fabric can be a great way for organizations to enhance their data analytics programs, but there are some considerations to weigh before doing so. We’ll highlight what you need to know to make this move a success.

Microsoft Fabric is an all-in-one SaaS analytics solution for enterprises that offers a comprehensive suite of services including Data Factory, Data Engineering, Data Warehousing, Data Science, Real-Time Intelligence, and Power BI. As organizations look to enhance their data analytics capabilities, migrating from Azure Synapse to Microsoft Fabric presents a viable path to leverage new features and improve performance. This guide explores the routes and steps involved in this migration, helping organizations transition smoothly based on their current architecture and the state of their Synapse warehouse.

Migration Paths

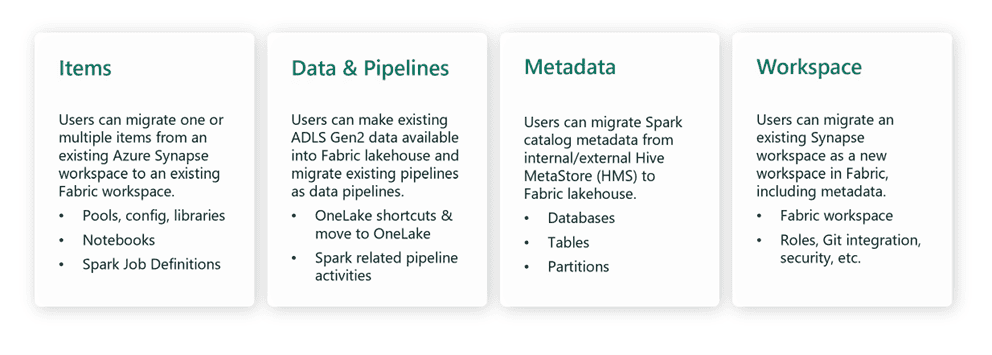

When migrating from Azure Synapse to Microsoft Fabric, the path depends on the starting point. This guide covers two starting points: an Azure Synapse dedicated SQL pool and Azure Synapse Spark.

Migration from Azure Synapse Dedicated SQL Pool to Fabric

Let’s explore the first option of migrating from an Azure Synapse dedicated SQL pool to Fabric.

Fabric comes with its own set of capabilities and limitations, so we need to confirm that the current architecture, schema, code, and data are compatible with it. We also need to estimate the refactoring work involved: redesigning incompatible items and adopting the designs needed to use Fabric’s new features. The goal is a solution that takes full advantage of Fabric’s high query performance potential, which may call for design patterns different from the traditional ones.

Although we can revisit most of these items after migration, taking a deep dive beforehand will save significant time and resources later.
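As a rough illustration of what a pre-migration schema check might look like, the sketch below scans table DDL for column types that need attention. The type mapping is a small, hypothetical sample based on our understanding of Fabric Warehouse at the time of writing, not an authoritative list, and the function and variable names are ours. Verify every entry against the current Fabric documentation before relying on it.

```python
import re

# Hypothetical, non-exhaustive mapping from Synapse dedicated SQL pool types
# to suggested Fabric Warehouse replacements. These entries are assumptions
# for illustration; confirm them against the current Fabric documentation.
ASSUMED_TYPE_REPLACEMENTS = {
    "money": "decimal(19, 4)",
    "smallmoney": "decimal(10, 4)",
    "datetime": "datetime2(6)",
    "smalldatetime": "datetime2(0)",
    "nvarchar": "varchar",
    "nchar": "char",
    "tinyint": "smallint",
}


def find_incompatible_types(ddl, replacements=ASSUMED_TYPE_REPLACEMENTS):
    """Return sorted (line_number, type_name, suggested_replacement) tuples.

    This is a plain text scan, not a SQL parser, so a column that happens
    to be named like a type (for example "money") is flagged as well.
    """
    findings = []
    for line_number, line in enumerate(ddl.splitlines(), start=1):
        for type_name, suggestion in replacements.items():
            # \b keeps "datetime" from matching inside "datetime2".
            if re.search(rf"\b{type_name}\b", line, flags=re.IGNORECASE):
                findings.append((line_number, type_name, suggestion))
    return sorted(findings)


# Made-up table definition used only to exercise the function.
sample_ddl = """CREATE TABLE dbo.Sales (
    SaleId int NOT NULL,
    Amount money NOT NULL,
    SoldAt datetime2(6) NOT NULL,
    Notes nvarchar(200) NULL
);"""

for finding in find_incompatible_types(sample_ddl):
    print(finding)
```

A scan like this only surfaces candidates for review. Decisions such as the right decimal precision, or whether a varchar column needs a different length, still have to be made per column.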

Microsoft recommends the following steps for migration.

At this point we can choose either “Lift and Shift” or “Modernize.”

Lift and Shift vs. Modernize

For stable and optimized current architectures, a “Lift and Shift” approach with minor adjustments may suffice. However, legacy data warehouses may require re-engineering to achieve optimal performance in Fabric.

We need to account for the design differences between Synapse and Fabric in the following areas:

Migration Methods for Schema, Code, and Data

Microsoft recommends various methods for migrating schema, code, and data, as detailed below:

| # | Option | What It Does | Skill/Preference | Scenario |
|---|---|---|---|---|
| 1 | Data Factory | Schema (DDL) conversion; data extract; data ingestion | ADF/Pipeline | Simplified all-in-one schema (DDL) and data migration. Recommended for dimension tables. |
| 2 | Data Factory with partition | Schema (DDL) conversion; data extract; data ingestion | ADF/Pipeline | Uses partitioning options to increase read/write parallelism, providing 10x throughput vs. option 1. Recommended for fact tables. |
| 3 | Data Factory with accelerated code | Schema (DDL) conversion | ADF/Pipeline | Convert and migrate the schema (DDL) first, then use CETAS to extract and COPY/Data Factory to ingest data for optimal overall ingestion performance. |
| 4 | Stored procedures accelerated code | Schema (DDL) conversion; data extract; code assessment | T-SQL | For SQL users working in an IDE who want more granular control over which tasks they work on. Use COPY/Data Factory to ingest data. |
| 5 | SQL Database Project extension for Azure Data Studio | Schema (DDL) conversion; data extract; code assessment | SQL Project | SQL Database Project for deployment, with the integration of option 4. Use COPY or Data Factory to ingest data. |
| 6 | Create External Table As Select (CETAS) | Data extract | T-SQL | Cost-effective, high-performance data extract into Azure Data Lake Storage (ADLS) Gen2. Use COPY/Data Factory to ingest data. |
| 7 | Migrate using dbt | Schema (DDL) conversion; database code (DML) conversion | dbt | Existing dbt users can use the dbt Fabric adapter to convert their DDL and DML. You must then migrate data using other options in this table. |
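To make the CETAS-then-COPY pattern from the table more concrete, here is a minimal sketch that builds the two T-SQL statements as strings: a CETAS statement to run on the Synapse dedicated SQL pool, and a COPY INTO statement to run on the Fabric warehouse. The table names, external data source, file format, and storage URL are all hypothetical placeholders. The sketch assumes the external data source and a Parquet file format already exist in Synapse, and it leaves out authentication options entirely.

```python
def cetas_statement(external_table, source_query, location, data_source, file_format):
    """Build a CETAS statement intended for the Synapse dedicated SQL pool.

    data_source and file_format are names of objects assumed to exist
    already (CREATE EXTERNAL DATA SOURCE / CREATE EXTERNAL FILE FORMAT).
    """
    return (
        f"CREATE EXTERNAL TABLE {external_table}\n"
        "WITH (\n"
        f"    LOCATION = '{location}',\n"
        f"    DATA_SOURCE = {data_source},\n"
        f"    FILE_FORMAT = {file_format}\n"
        ")\n"
        f"AS {source_query};"
    )


def copy_into_statement(target_table, source_url):
    """Build a COPY INTO statement intended for the Fabric warehouse.

    Assumes the extracted files are Parquet. Credential options are
    omitted here and depend on how the storage account is secured.
    """
    return (
        f"COPY INTO {target_table}\n"
        f"FROM '{source_url}'\n"
        "WITH (FILE_TYPE = 'PARQUET');"
    )


# Hypothetical names throughout; replace with your own objects and paths.
extract_sql = cetas_statement(
    external_table="ext.FactSales",
    source_query="SELECT * FROM dbo.FactSales",
    location="/migration/FactSales/",
    data_source="MigrationLake",
    file_format="ParquetFormat",
)
ingest_sql = copy_into_statement(
    target_table="dbo.FactSales",
    source_url="https://examplelake.dfs.core.windows.net/staging/migration/FactSales/*.parquet",
)

print(extract_sql)
print(ingest_sql)
```

The statements are assembled with plain string formatting, which is acceptable for a one-off migration script driven by a trusted table list but should not be fed untrusted input. The target table is expected to exist in Fabric before the COPY runs, which is why the options in the table convert the schema first.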

Migration from Azure Synapse Spark to Fabric

Microsoft also provides a recommended sequence of steps for migrating from Azure Synapse Spark to Fabric, intended to ensure a smooth transition and optimal performance in the new environment.

Microsoft Fabric Migration is an Art

Migrating from Azure Synapse to Microsoft Fabric involves a detailed assessment and strategic planning to ensure compatibility and leverage new capabilities. By following Microsoft’s recommended steps and methods, organizations can efficiently transition to a more robust analytics platform, enhancing their data operations and achieving greater performance. Whether opting for a “Lift and Shift” approach or a comprehensive modernization, understanding the nuances of both platforms will facilitate a successful migration.
