Hand Gesture Recognition Based on Computer Vision

Smartbridge is continuing our journey toward solving complex business challenges with AI. Here, we evaluate four tools for hand gesture recognition based on computer vision.

In a nutshell, as described in our blog post Computer Vision Applications for the Modernized Business, computer vision enables computers to understand the visual world by analyzing the images or video they see.

Smartbridge worked on a use case where Computer Vision can be applied to benefit a client in the food service, or restaurant, industry. This application would have the ability to interact with a device in a touchless manner using certain hand gestures.

The field of Artificial Intelligence and Computer Vision has evolved significantly in the past 5 years, and a wide variety of advanced tools is now available. This article discusses some of the technologies we evaluated to implement the desired solution.

Option #1: Emgu CV

Emgu CV is a cross-platform .NET wrapper for the OpenCV library that allows OpenCV functions to be called from .NET-compatible languages. (OpenCV is an open-source machine learning and computer vision software library that includes several hundred computer vision algorithms, mainly aimed at real-time computer vision.)

The Emgu CV library provides various capabilities for processing images to detect and identify specific objects.

In short, Emgu CV provides a set of foundational libraries that can be used for any image processing task, much as C/C++ provides foundational libraries on which programmers build custom applications for their needs.

Option #2: Azure Computer Vision

Azure provides a convenient and cost-effective way to leverage powerful AI services entirely through the cloud. Azure Computer Vision is Azure’s cloud service for analyzing the content of images and video using AI. For most image classification tasks, this service is going to be your go-to option. It returns accurate results with very little setup required aside from provisioning the service itself in your Azure subscription.

Microsoft provides two approaches to using this service: it can be used either entirely through a REST API exposed by the service, or through a client library that abstracts the various API operations into a series of callable methods. Behind the scenes, both options behave very similarly and are equally effective at analyzing images.
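As a rough sketch of the REST route, the snippet below builds (but does not send) a request that asks the service for tags. It assumes the v3.2 Analyze Image operation; the endpoint host, key, and image URL are placeholders, and `build_tag_request` is a hypothetical helper name of our own.

```python
import json
import urllib.request


def build_tag_request(endpoint, key, image_url):
    """Build a POST request asking the Analyze Image operation for tags.

    Assumption: the v3.2 REST route. Check the API version your resource uses.
    """
    url = endpoint.rstrip("/") + "/vision/v3.2/analyze?visualFeatures=Tags"
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,  # key of the provisioned resource
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder values only, not real credentials.
request = build_tag_request(
    "https://example-resource.cognitiveservices.azure.com/",
    "<your-key>",
    "https://example.com/hand.jpg",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually call the service. A client library wraps the same operation behind a method call.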

In either implementation, the image analysis will result in a set of tags that accurately describe the image.
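To show how those tags might be consumed, here is a minimal sketch that keeps only the tags above a confidence threshold. The payload is made up for illustration (it mirrors the `tags` / `name` / `confidence` shape of the service response), and both `confident_tags` and the 0.5 cutoff are our own assumptions.

```python
import json

# Made-up payload in the shape the service returns for tags; values are illustrative only.
SAMPLE_RESPONSE = """
{"tags": [
  {"name": "person", "confidence": 0.99},
  {"name": "hand", "confidence": 0.93},
  {"name": "indoor", "confidence": 0.41}
]}
"""


def confident_tags(response_text, min_confidence=0.5):
    """Return tag names whose confidence meets the (hypothetical) threshold."""
    payload = json.loads(response_text)
    return [
        tag["name"]
        for tag in payload.get("tags", [])
        if tag["confidence"] >= min_confidence
    ]


print(confident_tags(SAMPLE_RESPONSE))  # ['person', 'hand']
```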

Here’s a sample image to illustrate what you can expect to see using the service:

The Image

The tags (this is just a few of many):

This level of accuracy out of the box can be attributed to the underlying model having been pre-trained on a very large and varied collection of images, which is the clear benefit of cloud services such as these.

For what most users would need from an image analysis service, this does a great job and has a wonderfully low barrier to entry. But there is a clear limitation here: Azure Computer Vision works best when a high-level identification of the image is sufficient. It does not offer the granularity needed for a task such as hand gesture identification.

Option #3: Azure Custom Vision

Right there in the name, you can already tell this service is going to be better suited for a much more specific use case. Like Azure Computer Vision, Azure Custom Vision is an image recognition/classification service, but there’s a key difference – it allows you to train your own model using labels of your choosing.

While training an accurate AI model is no small feat, Azure Custom Vision can train a model from an initial “training set” of fewer than 100 images.

After you upload your training set of images through the Azure Custom Vision web interface, you choose a set of tags (classes) to label the images before letting the algorithm work its magic. In a matter of minutes, the algorithm will crunch your data and output a trained model to consume in your application. As an added plus, the model can either remain in the cloud or be exported and run locally with a variety of popular machine learning frameworks.

The ability to define a custom set of tags for classifying images allows us to detect small, granular differences between images that we otherwise couldn’t detect using Azure Computer Vision. Granted, the initial model isn’t intended to be infallible. The idea is that you continue to feed the model data and re-train, rinse and repeat. Still, it gives you a solid foundation and remarkably speeds up training a tailor-made AI model for your image classification use case.
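Once a custom model is trained, each prediction comes back as a list of your own tags with probabilities. The sketch below picks the most likely tag and rejects weak matches. The result dictionary is made up for illustration (it follows the `predictions` / `tagName` / `probability` shape of a prediction response), and the gesture tag names, `top_prediction`, and the 0.6 cutoff are hypothetical.

```python
def top_prediction(result, min_probability=0.6):
    """Return (tag_name, probability) for the best prediction, or None if too weak."""
    predictions = result.get("predictions", [])
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p["probability"])
    if best["probability"] < min_probability:
        return None
    return best["tagName"], best["probability"]


# Made-up result; the gesture tag names are hypothetical labels.
sample_result = {
    "predictions": [
        {"tagName": "open_palm", "probability": 0.91},
        {"tagName": "fist", "probability": 0.07},
        {"tagName": "thumbs_up", "probability": 0.02},
    ]
}
print(top_prediction(sample_result))  # ('open_palm', 0.91)
```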

Option #4: OpenPose and Emgu CV

Pose estimation is a computer vision technique used to detect the position and orientation of an object. OpenPose’s pose estimation capabilities provide a set of data points that can be connected to describe an individual’s pose, from which a human pose or hand pose skeleton can be determined. OpenPose provides real-time, multi-person keypoint detection for the body, face, hands, and feet. A portable version of OpenPose for Windows can be used with the Emgu CV .NET wrapper libraries to perform the pose estimation.

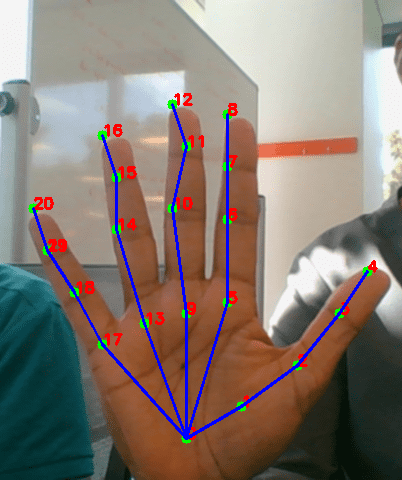

For example, OpenPose’s hand pose estimation model can be used to derive data points from a picture of the hand captured from the incoming video. OpenPose returns 21 key data points for the hand pose estimation, where each data point represents a location on the hand, as shown in the accompanying picture. Each data point consists of x and y coordinates followed by a probability that represents the detection confidence. By connecting the related data points, the skeleton of the hand pose can be derived.
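A minimal sketch of that idea follows, assuming the 21 points arrive as one flat list of `x, y, confidence` triples and follow the usual OpenPose hand ordering (0 is the wrist, then four points per finger). The helper names and the 0.1 confidence cutoff are our own, and the coordinates in the example are synthetic.

```python
from typing import NamedTuple


class Keypoint(NamedTuple):
    x: float
    y: float
    confidence: float


NUM_HAND_KEYPOINTS = 21

# Assumed index layout: 0 = wrist, thumb 1-4, index 5-8, middle 9-12,
# ring 13-16, little finger 17-20. Each chain starts at the wrist.
FINGER_CHAINS = [
    [0, 1, 2, 3, 4],
    [0, 5, 6, 7, 8],
    [0, 9, 10, 11, 12],
    [0, 13, 14, 15, 16],
    [0, 17, 18, 19, 20],
]


def parse_hand_keypoints(flat):
    """Group a flat [x0, y0, c0, x1, y1, c1, ...] list into 21 keypoints."""
    if len(flat) != NUM_HAND_KEYPOINTS * 3:
        raise ValueError("expected 21 keypoints with 3 values each")
    return [Keypoint(*flat[i:i + 3]) for i in range(0, len(flat), 3)]


def skeleton_segments(keypoints, min_confidence=0.1):
    """Return index pairs to connect, skipping joints detected too weakly."""
    segments = []
    for chain in FINGER_CHAINS:
        for a, b in zip(chain, chain[1:]):
            if min(keypoints[a].confidence, keypoints[b].confidence) >= min_confidence:
                segments.append((a, b))
    return segments


# Synthetic input: made-up coordinates, all detected with confidence 0.9.
flat_points = []
for i in range(NUM_HAND_KEYPOINTS):
    flat_points += [float(i), float(2 * i), 0.9]

hand = parse_hand_keypoints(flat_points)
print(len(hand), "keypoints,", len(skeleton_segments(hand)), "segments")
```

Drawing a line for each returned pair on the video frame produces the hand skeleton.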

OpenPose works best for scenarios that involve detecting key data points on the human body, hands, face, and feet. The key data points, returned as x and y coordinates with a detection confidence, can be used for further analysis.
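As one illustration of such further analysis, the sketch below applies a common heuristic: a finger counts as extended when its tip is farther from the wrist than its middle joint. This is not necessarily the approach we used in the project. The joint indices assume the usual OpenPose hand ordering, the thumb is left out for simplicity, and the hands are synthetic.

```python
import math

WRIST = 0
# (middle joint index, fingertip index) per finger, assuming OpenPose hand ordering.
FINGERS = {"index": (6, 8), "middle": (10, 12), "ring": (14, 16), "little": (18, 20)}


def _distance(a, b):
    """Euclidean distance between two (x, y, confidence) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def extended_fingers(points):
    """Names of fingers whose tip lies farther from the wrist than the middle joint."""
    wrist = points[WRIST]
    return [
        name
        for name, (joint, tip) in FINGERS.items()
        if _distance(wrist, points[tip]) > _distance(wrist, points[joint])
    ]


def synthetic_hand(curled):
    """Build 21 made-up points; `curled` folds the last two joints back to the palm."""
    points = [(0.0, 0.0, 1.0)]  # wrist
    for finger in range(5):
        x = (finger - 2) * 10.0
        for joint in range(1, 5):
            y = -20.0 * joint
            if curled and joint >= 3:
                y = -20.0 * (4 - joint) - 10.0
            points.append((x, y, 1.0))
    return points


print(extended_fingers(synthetic_hand(curled=False)))
print(extended_fingers(synthetic_hand(curled=True)))
```

A gesture such as an open palm or a closed fist can then be mapped from the list of extended fingers.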

Ultimately…

We opted to move forward using Emgu CV with OpenPose, which provided the estimation model to derive the data points from the hand. To further improve the accuracy of hand gesture recognition in the next phase of work, we will use the Pose Confidence Score as one of the data points from the hand pose estimation. We noticed that for certain hand gestures (for example, when all the fingers of the hand are closed), the data points from the hand pose estimation are not as expected. Using the confidence score, we can re-estimate the hand poses whose detections are not deemed strong enough.
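One way this check could look is sketched below. It assumes the pose confidence score is the mean of the per-keypoint detection confidences, which is our simplification for illustration, and the 0.5 threshold and function names are hypothetical.

```python
def pose_confidence(keypoints):
    """Mean detection confidence over (x, y, confidence) keypoints (assumed definition)."""
    if not keypoints:
        return 0.0
    return sum(confidence for _, _, confidence in keypoints) / len(keypoints)


def needs_reestimation(keypoints, min_score=0.5):
    """Flag a hand pose whose overall confidence falls below the threshold."""
    return pose_confidence(keypoints) < min_score


# Synthetic poses: a clearly detected hand and a weakly detected one (e.g. a closed fist).
strong_pose = [(0.0, 0.0, 0.9)] * 21
weak_pose = [(0.0, 0.0, 0.2)] * 21

print(needs_reestimation(strong_pose), needs_reestimation(weak_pose))
```

Frames flagged this way could be skipped or passed through the estimator again before a gesture is reported.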

Hopefully, this was a helpful prelude to your exploration of computer vision to solve complex business problems. Keep in mind that the possibilities for application are limitless, and new technologies to accomplish these goals are introduced in the market every day. The next time we publish an article about our experience with hand gesture recognition, who knows what new solutions we may feature!

Keep Reading: Computer Vision Applications for the Modernized Business
