Stabilizing the Clouds: Multi-Cloud Architecture Load Balancing

We live in an age where it seems like everything is in the cloud. Contrary to popular belief, however, the cloud is not a single entity. In today’s landscape, we have multiple cloud providers available, and we can select among them based on their performance characteristics. Today we’ll discuss multi-cloud architecture load balancing across various platforms.

Benefits of Multi-Cloud Architecture

When analyzing your current cloud architecture and other potential solutions, you might ponder the “why” question: why complicate my environment by learning yet another solution when my current architecture works perfectly fine? As we’ll explore in this article, there are several good reasons to consider adding another cloud environment.

For starters, if you do your homework, you might find a better price-to-performance ratio that improves your bottom line. More importantly, you want to be sure you are using the right tool for the job: each cloud environment may offer something that works better for the task at hand.

Depending on which regions of the world you are targeting, you might also gain access to regions and availability zones closer to your users. Last but not least, being able to switch between multiple cloud platforms can help protect you from large-scale provider outages and DDoS attacks.

Multi-Cloud Architecture Load Balancing

Now that we understand the high-level benefits of multi-cloud architecture, how do we tie it all together? This was a question we asked ourselves in our most recent Smartbridge hackathon.

Our team was already familiar with the offerings of AWS and Azure; however, we had minimal experience with Google Cloud. Were we prepared to make the “switch and fail” across various cloud platforms? We set ourselves the task of building a failover scenario to evaluate multi-cloud load balancing across all three platforms.

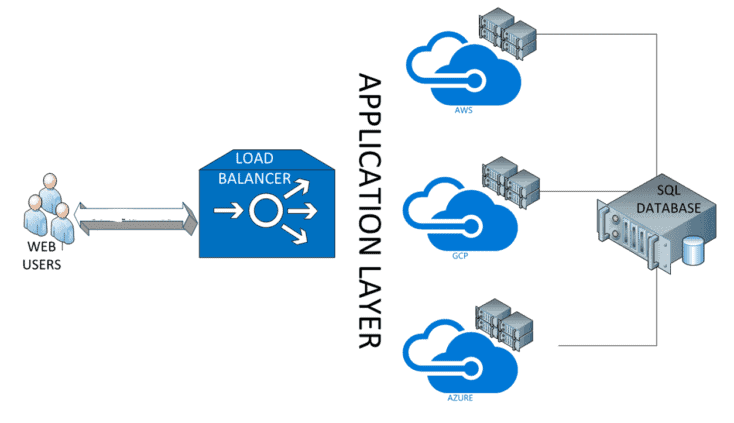

At the start of the day, our team decided to model the failover scenario. We did this by taking a pre-built application and preparing it for deployment across AWS, Azure, and GCP. Our goal was to have a single load balancer directing traffic to target endpoints hosted on each of these clouds.

For our first attempt, we took a familiar approach and used an AWS load balancer. We quickly discovered this solution wasn’t going to work, because the AWS load balancer only directed traffic to servers within the same private network. What we needed was a platform-agnostic load balancer capable of distributing traffic efficiently across every cloud platform.

After a few more hours of research (and pizza to refuel), we found several great platform-agnostic load balancers, including Nginx, Envoy, Traefik, and HAProxy. Each of these applications offers features that met our needs.

HAProxy Load Balancing Results

After reviewing each option, we decided to go with HAProxy. Being free and open source made it especially enticing. After a brief installation process, we configured HAProxy to load balance between three application servers hosting our web application.

We hosted each server on a different major cloud provider: Google Cloud Platform, Amazon Web Services, and Azure. Two of these servers formed our active pair and split incoming client requests between them. The third server sat on standby, waiting to be activated if one of the other servers went down.
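The routing policy described above can be modeled in a few lines. The sketch below is a minimal Python model under our own assumptions, not HAProxy's implementation or our hackathon configuration: the class `ActiveStandbyPool` and the server labels `"aws"`, `"azure"`, and `"gcp"` are hypothetical, and it assumes the standby is promoted as soon as any single active server is unhealthy. (HAProxy's own `backup` server option behaves differently: a backup server only receives traffic once all non-backup servers are unavailable.)

```python
from itertools import count


class ActiveStandbyPool:
    """Hypothetical model: round-robin over healthy active servers, with a
    standby server filling in for each active server that is down."""

    def __init__(self, active, standby):
        self.active = list(active)    # servers that normally share the load
        self.standby = list(standby)  # servers used only to replace failures
        self.healthy = set(self.active) | set(self.standby)
        self._counter = count()       # drives the round-robin rotation

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def serving_set(self):
        """Return the servers currently in rotation."""
        serving = [s for s in self.active if s in self.healthy]
        # Promote one healthy standby per unhealthy active server.
        missing = len(self.active) - len(serving)
        spares = [s for s in self.standby if s in self.healthy]
        return serving + spares[:missing]

    def pick(self):
        """Choose the server for the next request (round-robin)."""
        serving = self.serving_set()
        if not serving:
            raise RuntimeError("no healthy servers available")
        return serving[next(self._counter) % len(serving)]


if __name__ == "__main__":
    pool = ActiveStandbyPool(active=["aws", "azure"], standby=["gcp"])
    print([pool.pick() for _ in range(4)])  # ['aws', 'azure', 'aws', 'azure']
    pool.mark_down("aws")                   # simulate the AWS outage
    print([pool.pick() for _ in range(4)])  # ['azure', 'gcp', 'azure', 'gcp']
    pool.mark_up("aws")                     # AWS restored; GCP back on standby
    print(pool.serving_set())               # ['aws', 'azure']
```

Promoting a standby per failed active server, rather than waiting for the whole active pair to fail, keeps two servers in rotation during a single-provider outage. The trade-off is that the standby must be sized to carry a full active server's share of the traffic.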

We then simulated web traffic coming into our load balancer and displayed on screen which cloud platform served each request. With each incoming request, we saw traffic alternate between our active pair on AWS and Azure. Then, with the click of a button, we shut down our AWS service completely. When we clicked refresh, we were presented with the dreaded 404 error. What happened? Was this expected? Was our load balancer failing to engage?

We held our breath and reloaded again. This time, however, the page loaded with results coming from our Google Cloud instance, as expected! It seemed we had caught our HAProxy server just milliseconds before it automatically failed over. Our solution was performing as desired, failing over to another cloud platform when necessary. When we restored AWS, requests were again routed to the original pair, and our GCP instance returned to standby.
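Between the moment a server fails and the moment the load balancer notices, requests can still be routed to it, which is one way a brief error like ours can appear. Load balancers typically detect failures through periodic health checks; HAProxy, for example, uses the `rise` and `fall` server parameters to set how many consecutive successful or failed checks change a server's state (documented defaults: 2 and 3). The minimal Python sketch below models only that counting logic. The class `HealthState` is hypothetical, it is not HAProxy's code, and it makes no claim about the check settings used in the hackathon.

```python
class HealthState:
    """Hypothetical model of rise/fall health-check hysteresis for one server.

    The server is marked down only after `fall` consecutive failed checks and
    marked up again only after `rise` consecutive successful checks.
    """

    def __init__(self, rise=2, fall=3):
        self.rise = rise
        self.fall = fall
        self.up = True
        self._streak = 0  # consecutive results that disagree with self.up

    def record(self, check_passed):
        """Feed one health-check result and return the resulting state."""
        if check_passed == self.up:
            self._streak = 0          # result agrees with current state
            return self.up
        self._streak += 1
        threshold = self.fall if self.up else self.rise
        if self._streak >= threshold:
            self.up = not self.up     # enough evidence: flip the state
            self._streak = 0
        return self.up


if __name__ == "__main__":
    aws = HealthState(rise=2, fall=3)
    # Checks pass, AWS is shut down (3 failures), then AWS is restored.
    results = [True, True, False, False, False, True, True]
    print([aws.record(r) for r in results])
    # [True, True, True, True, False, False, True]
```

The first two failed checks leave AWS marked up, so requests arriving in that window can still be sent to it. With a check every `inter` milliseconds, a dead server can stay in rotation for up to roughly `fall` check intervals; lowering `fall` or `inter` shortens that window at the cost of more sensitivity to transient blips.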

Examining the Results

In our hackathon session, we learned that there are many things to consider when moving between platforms, including whether you need a platform-agnostic load balancer. In the long run, moving to a multi-cloud implementation offers several advantages.

There are many reasons why modern companies are choosing the multi-cloud route. However, it is crucial to plan your cloud strategy carefully first, because multi-cloud load balancing adds complexity to your environment. Done right, it can yield many benefits.

Looking for More on Cloud Solutions?

Explore more insights and expertise at smartbridge.com/modernization
