Loading Data into Snowflake from a Weather Service API

Having an understanding of how weather can impact sales is important to the restaurant business. This enables them to manage stock purchases and staffing based on the inclement weather conditions. We decided to tackle this problem as part of a recent hackathon event.

Smartbridge works with a number of companies in the food service industry and has collected a variety of data on operations through our applications and accelerators. Our goal with this exercise was to read weather data from a REST API and to explore the effect on sales. We used the ‘Precipitation Intensity’ factor from the forecast data and developed additional metrics to correlate sales with precipitation. This could help clients mitigate the impact of future inclement weather based on the forecast and historical behavior.

Hackathons are a cornerstone to our innovative mindset at Smartbridge. See how they can impact your organization.

Data Source

The key component to building this model was weather forecast data. We looked at API’s from various online weather data providers, like weather.gov and a few others. We went with Dark Sky because it had a number of features in which we were interested.

Approach

We worked with a combination of Microsoft Azure Data Factory (ADF) and the Snowflake data warehouse (both of which are Smartbridge partners) to build this solution.

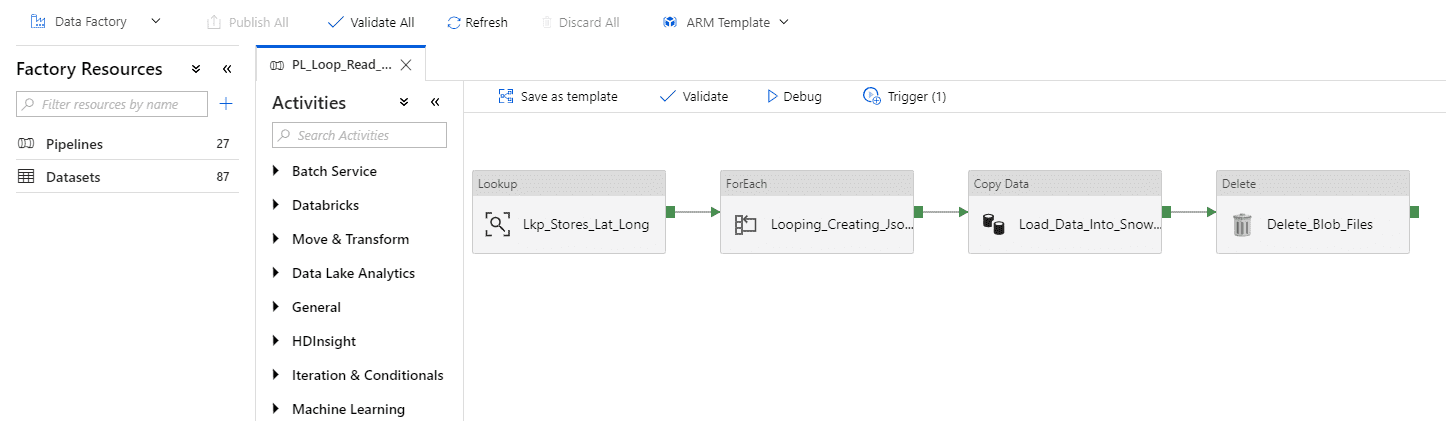

We created a pipeline in ADF to retrieve the data directly from the Dark Sky API in JSON format, bringing the files into BLOB storage, and loading them into the Snowflake tables. There was some additional logic required to parse the JSON file to read the hourly weather data.

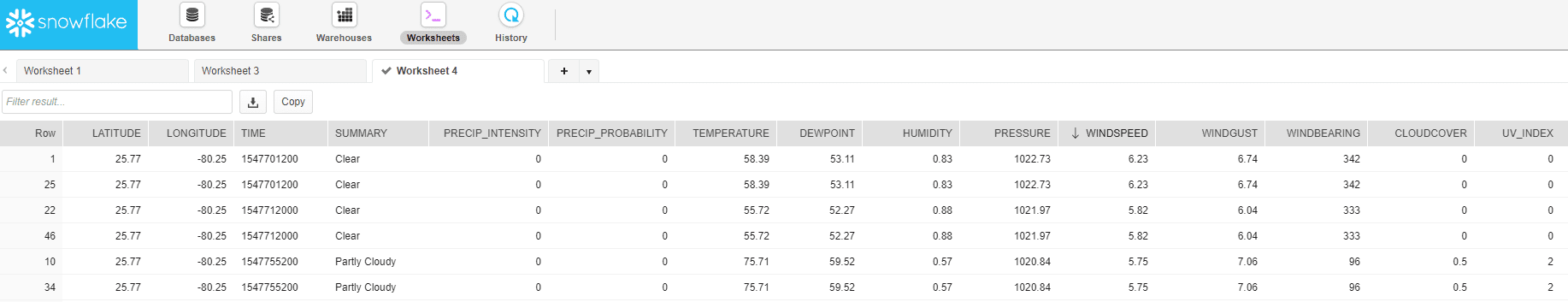

After the successful completion of the ADF pipeline process, data was available in Snowflake. The pipeline also included a cleanup task to delete the files from BLOB storage once they were loaded. Finally, views were created on the weather and sales tables to include date transformations on the epoch timestamp.

Analysis

To convert this data into actionable insights, we defined some strategies for analysis.

Level 1: Correlate sales and precipitation to help explain historical performance

Level 2: Combine different weather metrics for an ‘impact score’ (aka statistical covariance)

Level 3: Show the ‘tipping point’ for weather metrics that drive behavior, for example:

Level 4: Product-specific effects of weather, such as:

We have achieved the first two and continue to work through additional scenarios based on client feedback and requests.

Next Steps

The ADF pipeline process is scheduled to regularly load weather data for more locations and historical statistics that relate to sales data in our warehouse. We are setting up a sharing service in Snowflake to make this available as a service for our clients and partners. We look forward to updating the blog as we develop more features. For one thing, our Data Visualization team is anxious to play with this new data!

Learn more about our partnership with Snowflake

There’s more to explore at Smartbridge.com!

Sign up to be notified when we publish articles, news, videos and more!

Other ways to

follow us: