Using Azure ARM Templates for Data Factory Deployments

Azure Data Factory offers several ways to handle deployments. One of them is Azure ARM templates (Azure Resource Manager templates), which this article covers.

Article originally published November 2020

Creating Azure ARM Templates

Azure Resource Manager (ARM) templates support agile development on the Azure platform. Teams delivering solutions deploy to the cloud frequently and need to trust that their infrastructure remains in a reliable state. By expressing infrastructure as code, ARM templates help remove the barriers between operations, infrastructure, and development teams.

To meet these challenges, you can automate deployments using the practice of infrastructure as code. You define in code the infrastructure that needs to be deployed, and that code becomes part of your normal project. As with application code, you store the infrastructure code in a source repository. This article covers the step-by-step method for deploying Azure ARM templates for a Data Factory.

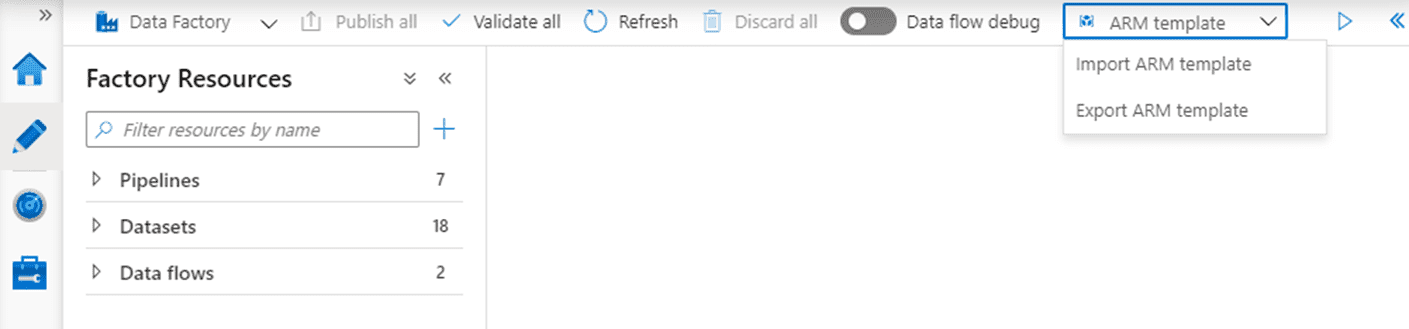

Step 1) Exporting the Template

Exporting the ARM template from the Data Factory downloads a zip file. The two main files in the archive are arm_template.json and arm_template_parameters.json. The template file contains the code for all objects within the Azure Data Factory. The parameters file lets you provide connection details such as connection strings, users, and passwords, depending on the data stores used. You can edit this file to avoid typing the parameters manually every time you deploy.
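Editing the parameters file can also be scripted. The following is a minimal Python sketch, assuming the parameters file follows the standard ARM layout in which each entry under "parameters" holds a "value" key. The helper name `apply_parameter_overrides`, the sample parameter names, and the sample values are hypothetical and only serve as illustration; a real export will list whatever parameters your factory defines.

```python
import copy
import json


def apply_parameter_overrides(parameters_doc, overrides):
    """Return a copy of an ARM parameters document with new values applied.

    Hypothetical helper: it only replaces parameters that already exist in
    the document, so a typo in a parameter name fails loudly.
    """
    updated = copy.deepcopy(parameters_doc)
    params = updated.setdefault("parameters", {})
    for name, value in overrides.items():
        if name not in params:
            raise KeyError(f"Parameter not declared in file: {name}")
        params[name] = {"value": value}
    return updated


# Hypothetical stand-in for the contents of arm_template_parameters.json.
# In practice you would load it with json.load(open(path)).
exported = {
    "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "factoryName": {"value": "adf-dev"},
        "AzureSqlDatabase_connectionString": {"value": ""},
    },
}

# Values for the target environment (assumed names and values).
test_params = apply_parameter_overrides(
    exported,
    {
        "factoryName": "adf-test",
        "AzureSqlDatabase_connectionString": "<connection string for test>",
    },
)

print(json.dumps(test_params, indent=2))
```

Keeping one overrides dictionary per environment lets the same exported template be reused across environments without editing the JSON by hand.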

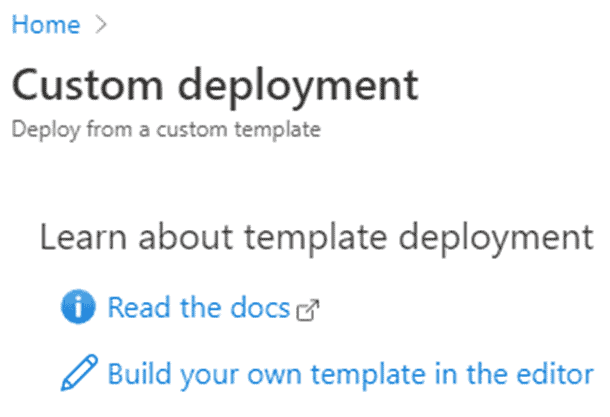

Step 2) Deploying the Template

Connect to the Data Factory where the template will be deployed, choose to import the ARM template, and select the “Build your own template in the editor” option.

Step 3) Loading the Template

Load the template and parameters JSON files to provide the connection details. Keep in mind that to finalize the deployment you have to click the “Purchase” button. This does not mean every deployment incurs a cost, but if the template provisions services that are not free, you start paying as soon as they are provisioned.
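The steps above use the portal. As an alternative for automated deployments, the same two files can be passed to the Azure CLI command `az deployment group create`. The Python sketch below only assembles that command; the helper name `build_deployment_command` and the resource group name `rg-data-test` are hypothetical, and the sketch assumes the Azure CLI is installed and already logged in if you choose to run the resulting command.

```python
import shlex


def build_deployment_command(resource_group, template_file, parameters_file, name=None):
    """Assemble an 'az deployment group create' command as an argument list.

    Hypothetical helper: it builds the command but does not execute it.
    """
    command = [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", template_file,
        # The '@' prefix tells the CLI to read parameters from a file.
        "--parameters", f"@{parameters_file}",
        # Incremental mode leaves resources not in the template untouched.
        "--mode", "Incremental",
    ]
    if name:
        command += ["--name", name]
    return command


command = build_deployment_command(
    resource_group="rg-data-test",  # assumed resource group name
    template_file="arm_template.json",
    parameters_file="arm_template_parameters.json",
)

# Print the command for review; pass the list to subprocess.run(command, check=True)
# to actually start the deployment.
print(shlex.join(command))
```

Building the command as a list and printing it first makes it easy to review what will be deployed before anything is provisioned.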

Deployment Considerations and Best Practices

These are a few considerations and best practices to follow for deployments:
