Production Performance Tuning with Azure Functions

Learn how Smartbridge approaches tuning the production performance of applications that must process large volumes of data as quickly as possible. We’ll look specifically at a real-life example using Microsoft Azure Functions.

Many modern business applications require real-time, or at least near-real-time, data. This data could be anything from sensor readings to purchase transactions. Either way, it comes down to a large volume of data that needs to be processed as quickly as possible. Instead of keeping transaction-processing servers in house, companies are increasingly turning to cloud Platform as a Service (PaaS) providers for the flexibility, scalability, and cost savings they offer.

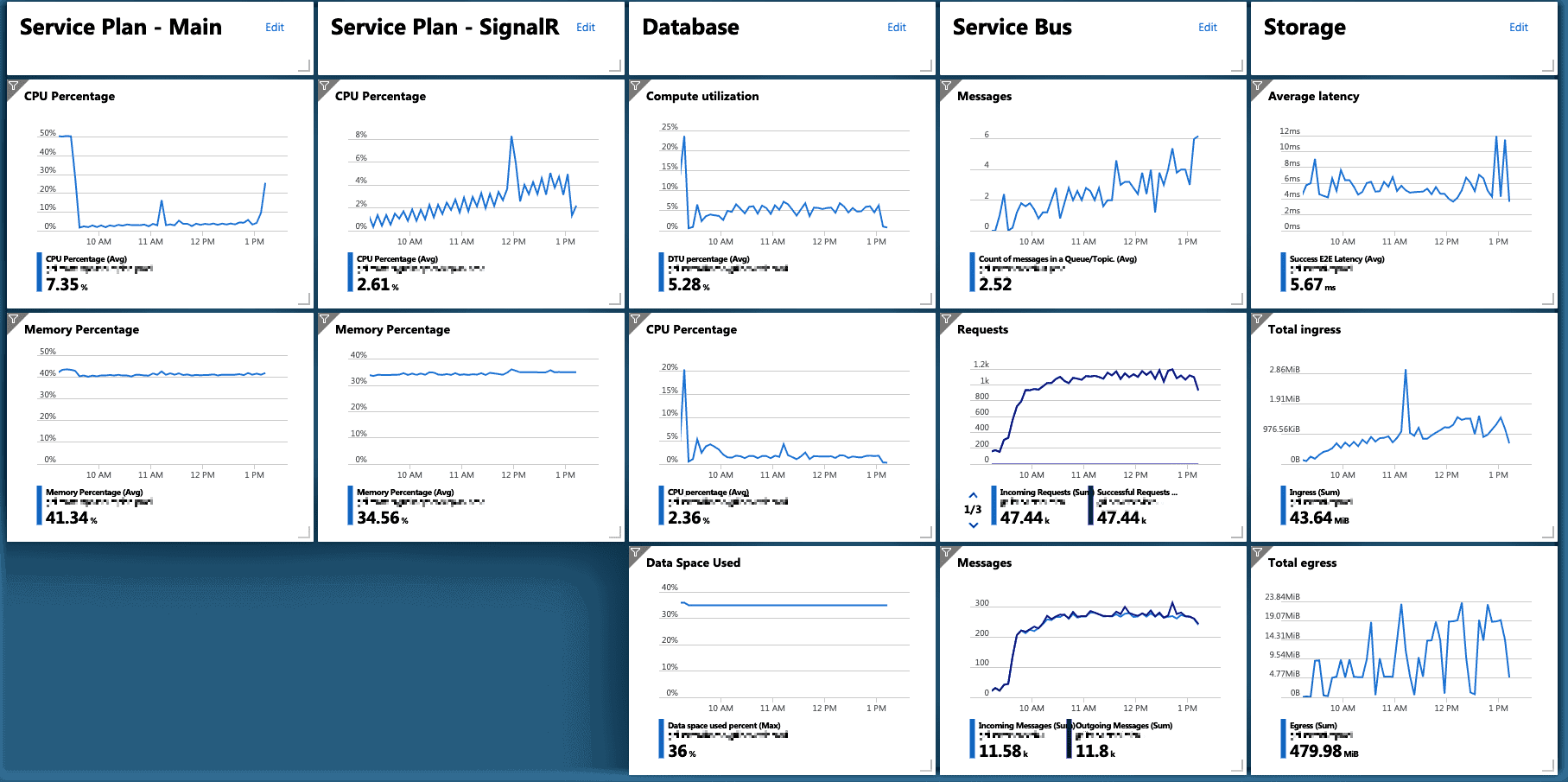

For example, we have an application in 200+ stores that sends transactions throughout the day to an Azure App Service. An Azure App Service hosts an application that runs continuously on the servers of its App Service plan, waiting for web traffic, just like a typical web server. We started with five stores during testing, and everything worked beautifully: the server easily kept up with the transactions. Pleased with our success, we then deployed to forty stores. Now we see something happening! Using the monitoring tools built into Azure, we can view a wide range of service metrics at once on our custom dashboard. In this case, we see that the processor is maxed out and that messages are backing up in the queue because of the increased number of transactions. This means transactions are not being processed on time. We had to fix this!
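
What the dashboard shows follows from simple arithmetic: whenever transactions arrive faster than the server can process them, the difference accumulates in the queue minute after minute. The following Python sketch is a simplified model, not the application’s code; `simulate_backlog` is a hypothetical helper, and the per-store rate and server capacity are made-up numbers chosen only for illustration.

```python
from __future__ import annotations


def simulate_backlog(arrivals_per_min: list[int], capacity_per_min: int) -> list[int]:
    """Return the queue depth at the end of each minute.

    Each minute, new messages join the queue and the server drains up to
    capacity_per_min of them; whatever it cannot process carries over.
    """
    backlog = 0
    depths = []
    for arrivals in arrivals_per_min:
        backlog = max(0, backlog + arrivals - capacity_per_min)
        depths.append(backlog)
    return depths


# Hypothetical figures: about 20 transactions per store per minute, and one
# server that can process about 500 transactions per minute.
PER_STORE_RATE = 20
CAPACITY = 500

print(simulate_backlog([5 * PER_STORE_RATE] * 10, CAPACITY)[-1])   # 0: keeping up
print(simulate_backlog([40 * PER_STORE_RATE] * 10, CAPACITY)[-1])  # 3000: falling behind
```

Once arrivals exceed capacity, the backlog grows every minute instead of settling, so the queue keeps backing up until either the load drops or the capacity increases.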

The Easy Fix?

Cloud-hosted services include the capability to scale “up” as well as scale “out”. Scaling up (or down) increases (or decreases) the server’s capabilities: processing power, RAM, and throughput. Scaling out adds instances of the application or service to handle more traffic, and scaling in removes instances when traffic decreases. Scaling out can be done on the fly and can even be automated based on any number of criteria, such as CPU usage or queue length.

So, we turn on automatic scaling and select the criteria for scaling out and scaling in. Within minutes, additional instances of our application spin up and guzzle down the transactions in the queue. With the load reduced, autoscaling scales back in, removing instances until only as many remain as are needed to handle the traffic.
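
A metric-based autoscale rule boils down to a threshold check plus instance limits. The following Python sketch models that idea with a hypothetical `AutoscaleRule` class and hypothetical thresholds (scale out above 70% average CPU, scale in below 30%). It is not Azure’s autoscale engine: real Azure autoscale rules also aggregate the metric over a time window and enforce a cool-down period between scale actions, which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass
class AutoscaleRule:
    """Hypothetical CPU-based scale-out/scale-in policy (illustration only)."""

    scale_out_above: float = 70.0  # average CPU % that triggers adding instances
    scale_in_below: float = 30.0   # average CPU % that triggers removing instances
    step: int = 1                  # instances added or removed per action
    min_instances: int = 1
    max_instances: int = 20        # the article's autoscaling topped out at 20

    def next_instance_count(self, current: int, avg_cpu_percent: float) -> int:
        if avg_cpu_percent > self.scale_out_above:
            target = current + self.step   # busy: scale out
        elif avg_cpu_percent < self.scale_in_below:
            target = current - self.step   # idle: scale in
        else:
            target = current               # inside the comfort band: no change
        # Clamp to the configured instance limits.
        return max(self.min_instances, min(self.max_instances, target))


rule = AutoscaleRule()
print(rule.next_instance_count(3, 92.0))   # 4
print(rule.next_instance_count(4, 15.0))   # 3
print(rule.next_instance_count(20, 99.0))  # 20: already at the limit
```

The gap between the two thresholds keeps the rule from flapping between scale-out and scale-in on every small change in load.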

Things are Looking Up?

Now that we have automatic scaling working for us, we get brave (and a little foolish) and bring on the rest of the stores. The firehose is wide open now and hits us square in the face, throwing us across the floor in a very cartoony fashion. It’s too much. Autoscaling kicks in, but we quickly realize that it maxes out at 20 instances on our plan. It’s not keeping up. So, let’s go up! We scale the server up to the next service level, which increases the RAM, processor, and throughput of each instance. Things eventually return to normal, but now we are running a more expensive server, scaled out to an uncomfortably high instance count, and it still may not keep up during a traffic spike. We had to look deeper.
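
The wall we hit is easy to see with back-of-the-envelope math: the instance count you need is the peak transaction rate divided by what one instance can handle, rounded up. Scaling up raises the per-instance figure; scaling out raises the count, but only to the plan’s limit. The helper names and rates in this Python sketch are hypothetical, not measurements from this project.

```python
import math


def instances_needed(peak_tx_per_min: float, tx_per_instance_per_min: float) -> int:
    """Smallest instance count whose combined throughput covers the peak."""
    return math.ceil(peak_tx_per_min / tx_per_instance_per_min)


def fits_within_limit(peak_tx_per_min: float, tx_per_instance_per_min: float,
                      max_instances: int = 20) -> bool:
    """True if scaling out alone can cover the peak without exceeding the limit."""
    return instances_needed(peak_tx_per_min, tx_per_instance_per_min) <= max_instances


PEAK = 12_000  # hypothetical peak transactions per minute with every store online

print(instances_needed(PEAK, 500), fits_within_limit(PEAK, 500))    # 24 False
print(instances_needed(PEAK, 1000), fits_within_limit(PEAK, 1000))  # 12 True
```

In this model, scaling up (doubling per-instance throughput) is what brings the count back under the limit, but every one of those instances now costs more, and there is little headroom left for a spike.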

Another Way of Thinking

We pay for these servers, their computing power, and their resources. But more important than the hardware we are running on is what we are trying to accomplish. We have to think about the data first. We know we are getting transactions from 200 stores, but when do we get them? Looking at the design specifications, we can see that each store sends transactions every five minutes. Our dashboard confirms that the spikes of activity occur at the same interval. When the system was falling behind, processor use was constant. Now we can see processing drop off between intervals, which means we are paying a lot more money for a processor that sits idle much of the time. This is not what we want.
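
Because every store sends on the same five-minute cycle, the load arrives in bursts. A quick calculation shows how much of each interval a server sized for the burst actually spends working. The `interval_utilization` helper, the burst size, and the processing rates in this Python sketch are hypothetical, and the model assumes every store’s batch arrives at the start of the interval.

```python
import math


def interval_utilization(burst_size: int, capacity_per_sec: float,
                         interval_sec: int = 300) -> float:
    """Fraction of one send interval that the provisioned capacity is busy.

    Assumes the whole burst lands at the start of the interval and is then
    processed at full capacity until the queue is empty.
    """
    busy_sec = math.ceil(burst_size / capacity_per_sec)
    # A value of 1.0 means the burst outlasts the interval and work piles up.
    return min(1.0, busy_sec / interval_sec)


# Hypothetical: 200 stores x 150 transactions each, arriving every 5 minutes.
BURST = 200 * 150

print(f"{interval_utilization(BURST, 1000):.0%}")  # 10%: idle nine-tenths of the time
print(f"{interval_utilization(BURST, 50):.0%}")    # 100%: constantly behind
```

With these made-up numbers, capacity that is constantly behind at 50 transactions per second sits idle 90% of the time at 1,000 per second, so sizing an always-on server for the burst means paying mostly for idle time.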

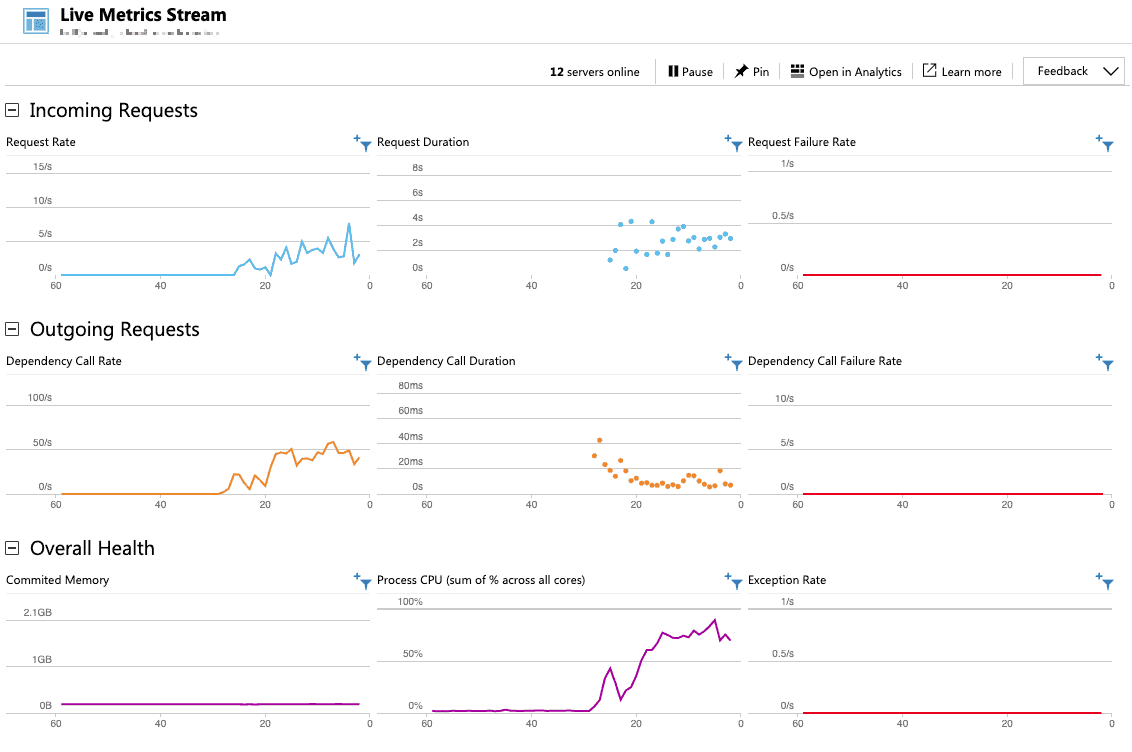

Inviting a Few Dozen Friends for a Drink

Azure and other cloud service providers also offer “serverless computing”. You deploy a piece of code and have it triggered to run by an event, such as a message arriving in a queue. It does its work and shuts down. It doesn’t live on a specific server; it is there when you need it, and you pay only for the time it spends executing your code. These functions can quickly scale out to as many as 200 instances. So, after a little refactoring, we split the transaction processing logic out into Azure Functions. Now the workload itself determines the number of instances, and we can scale the App Service back down to a lower pricing tier. Watching this in action in the Functions Live Metrics view, we can see transactions coming in and function instances spinning up and completing the processing. We are now handling the transaction load from all stores, nowhere near the processing limits, and in the process we’ve reduced our costs to a fraction of what they were.
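
Two properties make the serverless model a better fit for this bursty load: the platform adds instances in proportion to the waiting work (and removes them, down to zero, when the queue is empty), and billing follows execution time rather than wall-clock time. The following Python sketch models both ideas. In Azure, the scale controller makes the instance decision for you; the helper names, drain target, and per-instance rate here are hypothetical. Consumption-plan billing is based on memory-weighted execution time (GB-seconds) plus a per-execution charge, which this sketch simplifies to plain seconds.

```python
import math

MAX_FUNCTION_INSTANCES = 200  # scale-out ceiling cited in the article


def function_instances_for(backlog: int, tx_per_instance_per_sec: float,
                           drain_target_sec: float) -> int:
    """Instances needed to clear the backlog within the target, capped at the limit.

    An empty queue needs zero instances, which is how pay-per-execution
    avoids paying for idle time.
    """
    wanted = math.ceil(backlog / (tx_per_instance_per_sec * drain_target_sec))
    return min(MAX_FUNCTION_INSTANCES, wanted)


def billed_seconds_always_on(instances: int, interval_sec: int = 300) -> int:
    """Always-on servers are billed for the whole interval, busy or idle."""
    return instances * interval_sec


def billed_seconds_serverless(backlog: int, tx_per_instance_per_sec: float) -> float:
    """Pay-per-execution: total execution time, however many instances share it."""
    return backlog / tx_per_instance_per_sec


BURST = 30_000  # hypothetical transactions per five-minute interval
RATE = 100      # hypothetical transactions per second per instance

print(function_instances_for(BURST, RATE, drain_target_sec=10))  # 30
print(function_instances_for(0, RATE, drain_target_sec=10))      # 0
print(billed_seconds_always_on(10))                              # 3000
print(billed_seconds_serverless(BURST, RATE))                    # 300.0
```

With these made-up numbers, a pool of ten always-on instances is billed for 3,000 instance-seconds per interval, while the same work executed on demand accrues about 300. The model ignores cold starts and per-execution overhead.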

Design. Don’t Just Throw Darts.

When designing a system like this, always start by understanding your data and processing requirements. Knowing how the data will arrive, how frequently, and how much processing it requires will guide you toward the best design. It is essential to load test at levels equal to and greater than the expected volume. The tools offered by Azure and other cloud services are beneficial when used correctly. Make sure you aren’t misusing them as a quick fix for all your problems. If you make that mistake, you will be left completely drenched or, worse yet, left to drown.
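
Load testing above the expected volume can start small. The following Python sketch is a hypothetical in-process harness, not a tool from the article: `run_load_test` pushes the expected volume, then 1.5x and 2x that volume, through a transaction handler and checks each run against a time budget (here, the five-minute send interval). It exercises only the processing code; a complete load test should also drive the deployed endpoint or queue so that Azure’s scaling behavior is part of what you measure.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Tuple


def run_load_test(
    handler: Callable[[int], None],
    expected_volume: int,
    budget_sec: float,
    multipliers: Iterable[float] = (1.0, 1.5, 2.0),
    workers: int = 8,
) -> Dict[float, Tuple[int, float, bool]]:
    """Run expected_volume * m synthetic transactions through handler for each multiplier m.

    Returns {multiplier: (transactions_sent, elapsed_seconds, within_budget)}.
    """
    report: Dict[float, Tuple[int, float, bool]] = {}
    for m in multipliers:
        count = int(expected_volume * m)
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # list() waits for every call and re-raises any handler error.
            list(pool.map(handler, range(count)))
        elapsed = time.perf_counter() - start
        report[m] = (count, elapsed, elapsed <= budget_sec)
    return report


def fake_transaction(tx_id: int) -> None:
    """Hypothetical stand-in for the real processing logic (simulated 1 ms of I/O)."""
    time.sleep(0.001)


# Hypothetical expected volume of 1,000 transactions per burst, with the
# five-minute send interval as the time budget for clearing each burst.
for m, (sent, secs, ok) in run_load_test(fake_transaction, 1000, budget_sec=300).items():
    print(f"{m:.1f}x: {sent} transactions in {secs:.2f}s -> {'OK' if ok else 'TOO SLOW'}")
```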

Looking for more on Data Management?

Explore more insights and expertise at smartbridge.com/data
