AI Governance Framework

As AI transforms industries, a strong governance framework is essential for using AI ethically, securely, and efficiently. It helps organizations manage risks, safeguard stakeholders, and build trust in AI systems.

In an era where artificial intelligence (AI) technology is rapidly transforming industries, establishing a robust AI governance framework is crucial for organizations aiming to harness its benefits responsibly. AI governance involves setting guidelines that ensure the ethical, secure, and efficient use of AI technologies. By adhering to a well-structured governance framework, organizations can mitigate risks, protect stakeholders’ interests, and enhance trust and reliability in their AI applications.

What is AI Governance and why should I care about it?

The primary role of AI governance is to keep AI development and usage secure, ethical, and responsible. It comprises a system of rules, processes, frameworks, and tools designed so that AI operations adhere to an organization’s core principles, comply with legal standards, and meet ethical and social expectations. As one component of the wider organizational governance structure, AI governance integrates with IT governance, data governance, and corporate governance.

Why is an AI Governance Framework Important?

While powerful, AI technologies pose distinct challenges related to privacy, security, and ethics. A practical AI governance framework helps organizations navigate these challenges by providing clear guidelines on AI usage. It also supports compliance with evolving regulations, protects intellectual property and privacy rights, and maintains public trust through transparency and accountability. Ultimately, a sound AI governance framework mitigates risks while enhancing operational efficiency and innovation.

Four Pillars of AI Governance

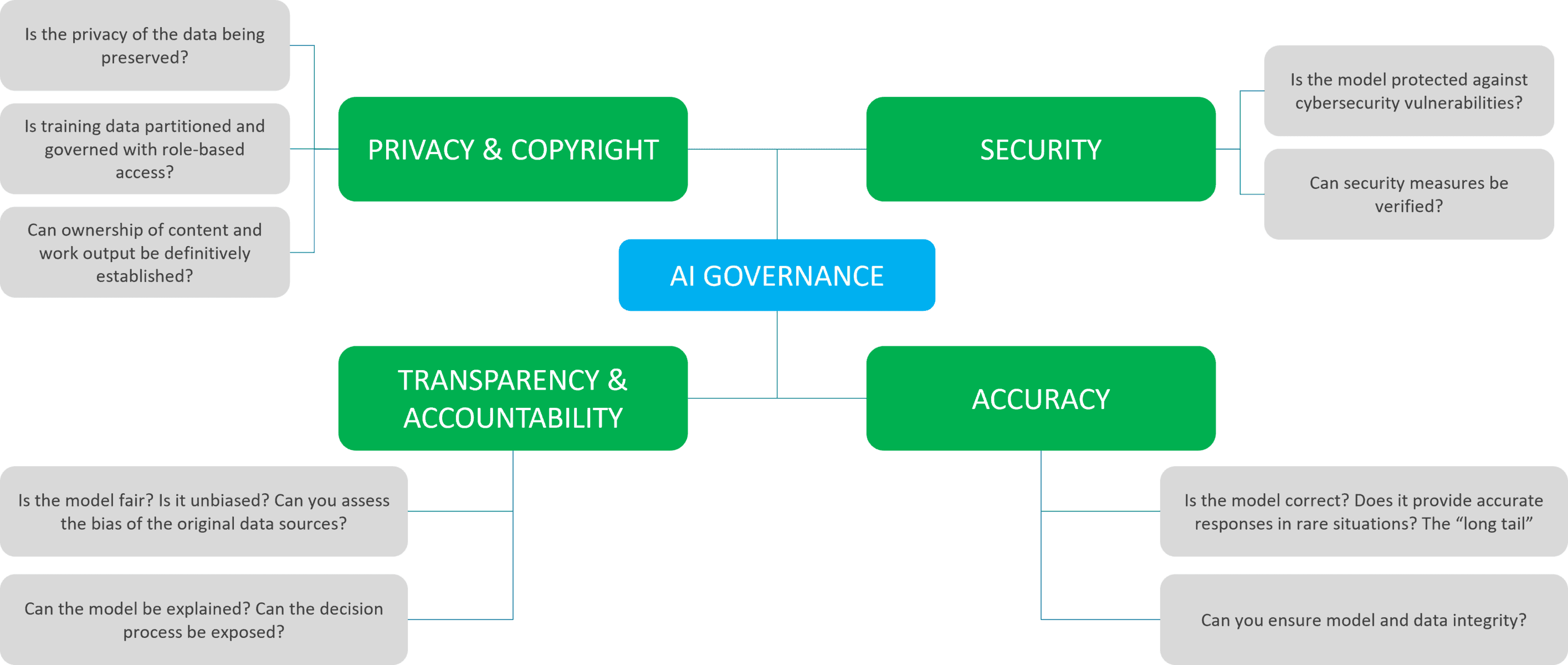

The foundation of a practical AI governance framework can be structured around four main elements: Privacy & Copyright, Security, Transparency & Accountability, and Accuracy.

Steps to Get Started with AI Governance

Implementing an AI governance framework can seem daunting, but organizations can start with the following manageable steps:

1. Develop an AI Policy: Create comprehensive AI guidelines that outline how AI should be used within the organization. This policy should address the ethical use of AI, data handling practices, compliance with legal standards, and methods for reporting and addressing AI-related issues.


2. Establish a Governance Committee: Set up a cross-functional team that includes members from IT, legal, compliance, and business units. This committee will oversee the implementation of AI policies, monitor compliance, and ensure that AI practices align with organizational values and legal requirements.

3. Conduct AI Awareness Training: Educate employees about the potential risks and benefits of AI technologies. Training should cover the organization’s AI policies, ethical considerations, and their roles in supporting AI governance.

4. Implement Monitoring Mechanisms: Schedule regular audits and reviews of AI systems to check compliance with governance policies and the effectiveness of AI applications.

5. Engage with External Experts: Collaborate with legal advisors, industry experts, and technologists to stay updated on best practices and regulatory changes in AI governance. This external input can help refine governance strategies and ensure comprehensive oversight.
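As one illustration of the monitoring step above, a governance committee could keep a simple inventory of AI systems and flag those that need attention. The following is a minimal sketch, not a prescribed method: the `AISystem` record, the `audit_findings` helper, the 90-day review interval, and the example systems are all hypothetical, and the pillar names simply mirror the four pillars listed earlier.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# The four pillars named in this article, written as identifiers.
PILLARS = (
    "privacy_copyright",
    "security",
    "transparency_accountability",
    "accuracy",
)


@dataclass
class AISystem:
    """One entry in a hypothetical inventory of AI systems."""
    name: str
    last_audit: date
    # Pillar name -> True if the most recent review passed that pillar.
    checks: dict = field(default_factory=dict)


def audit_findings(systems, today, max_age_days=90):
    """Return (system name, issue) pairs for the committee to review."""
    findings = []
    for system in systems:
        # Flag systems whose last audit is older than the assumed interval.
        if today - system.last_audit > timedelta(days=max_age_days):
            findings.append((system.name, "audit overdue"))
        # Flag any pillar that is missing or failed in the last review.
        for pillar in PILLARS:
            if not system.checks.get(pillar, False):
                findings.append((system.name, f"{pillar} not verified"))
    return findings


# Illustrative data only; these systems and dates are invented.
inventory = [
    AISystem("support-chatbot", date(2024, 1, 10), {p: True for p in PILLARS}),
    AISystem("resume-screener", date(2024, 5, 1), {"security": True, "accuracy": True}),
]

for name, issue in audit_findings(inventory, today=date(2024, 6, 1)):
    print(f"{name}: {issue}")
```

In practice the inventory would more likely come from an asset register or ticketing system than from hard-coded records, and the review interval would be set by the organization's own AI policy.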

AI Governance is a Necessity

Implementing an AI governance framework is not just about compliance; it’s about building a foundation for sustainable and ethical AI usage that aligns with organizational goals and societal norms. By following these steps and integrating established guidelines from NIST, IEEE, and others, organizations can ensure that their AI initiatives are both innovative and responsible. This strategic approach not only mitigates risks but also enhances trust and reliability, paving the way for successful AI integration in business operations.

Are you looking for AI Advisory Services? Smartbridge senior experts are available to discuss your vision for AI. Book on our calendar here.
