Introduction to Azure Data Factory Alerts

Enabling Azure Data Factory alerts allows organizations to monitor their systems closely and respond appropriately to any problems that arise. We’ll discuss the different kinds of alerts and walk you through how we implemented them for one of our clients.

Article originally published March 2023

Operations require steady support to run smoothly and to communicate both success and failure. Configuring alerts to monitor the system and its parameters helps organizations respond and take the required actions when critical events occur. Azure provides alerting capabilities for all of its resources. In this article, we discuss how to enable alerts in Azure Data Factory using a quick and easy interface.

Main Types of Alerts

Metric Alerts

Metric alert rules are specified with target criteria for a metric within the resource to be monitored. When the condition is met and the alert rule fires, notifications are sent to an action group. Here are a few more attributes of metric alerts:

Log Analytics Alerts

These alerts are triggered based on log searches that run automatically at periodic intervals. Advanced alerting for non-fatal errors, warnings, and business logic errors can be created in Azure Monitor and Log Analytics. A few more attributes of log analytics alerts are:

Azure Data Factory Alerts

At Smartbridge, we implemented native ADF Alerts as part of an Azure implementation for a client. We created two alert rules: one to monitor pipeline failures and the other for trigger failures. Below is an overview of the steps we took and how the alerts work.

Start by navigating to the Monitor tile in Data Factory and clicking “New Alert Rule”. Below are the parameters used to create an alert rule to monitor pipeline failures:

Once the rule is enabled, the system checks for pipeline failures over the past 60 seconds and then repeats the check every minute. If the count of pipeline failures in that period is greater than or equal to 1, the alert rule fires. Depending on the notification mode configured, an email, SMS, or voice call is sent to the action group to report that the alert was activated.
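The evaluation described above can be sketched in Python. This is a simplified model, not Azure Monitor's implementation: the class `PipelineFailureRule` and its field names are hypothetical, and only the values (a 60-second window, a one-minute check frequency, and a threshold of 1) come from the rule described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class PipelineFailureRule:
    """Hypothetical model of the alert rule; field names are illustrative."""
    window: timedelta = timedelta(seconds=60)    # look-back period
    frequency: timedelta = timedelta(minutes=1)  # how often the rule is evaluated
    threshold: int = 1                           # fire when failures >= threshold

    def failures_in_window(self, failure_times, now):
        """Count failures that occurred within the look-back window ending at `now`."""
        start = now - self.window
        return sum(1 for t in failure_times if start < t <= now)

    def should_fire(self, failure_times, now):
        """True when the failure count in the window meets or exceeds the threshold."""
        return self.failures_in_window(failure_times, now) >= self.threshold


rule = PipelineFailureRule()
now = datetime(2023, 3, 1, 12, 0, 0)             # arbitrary evaluation time
recent_failure = [now - timedelta(seconds=30)]   # inside the 60-second window
old_failure = [now - timedelta(minutes=5)]       # outside the window

print(rule.should_fire(recent_failure, now))
print(rule.should_fire(old_failure, now))
```

The first call reports that the rule fires because one failure falls inside the window; the second does not fire because the only failure is older than 60 seconds.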

If another pipeline failure occurs during the evaluation window, the alert condition remains fired. Once pipeline executions succeed, the failure count drops below the threshold. The count must stay below the threshold for three healthy executions before the alert moves to the resolved condition. A notification is then sent for alert deactivation.

The alert condition must change to resolved before further alerts can be triggered for that specified metric. The alert condition is controlled by the system whereas the state of the alert can be modified by the user.
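The fired and resolved behavior can be illustrated with a small state machine. This is a sketch under stated assumptions, not how Azure Monitor is implemented: the class `AlertCondition` and its names are hypothetical, and "three healthy executions" is modeled here as three consecutive evaluations with a failure count below the threshold.

```python
from enum import Enum


class Condition(Enum):
    FIRED = "Fired"
    RESOLVED = "Resolved"


class AlertCondition:
    """Simplified, hypothetical model of the system-controlled alert condition."""

    def __init__(self, threshold=1, healthy_checks_to_resolve=3):
        self.threshold = threshold
        self.healthy_checks_to_resolve = healthy_checks_to_resolve
        self.condition = Condition.RESOLVED
        self.healthy_streak = 0

    def evaluate(self, failure_count):
        """Process one evaluation; return a notification string or None."""
        if failure_count >= self.threshold:
            self.healthy_streak = 0
            if self.condition is Condition.RESOLVED:
                self.condition = Condition.FIRED
                return "Alert activated"
            return None  # already fired: no new alert until resolved
        if self.condition is Condition.FIRED:
            self.healthy_streak += 1
            if self.healthy_streak >= self.healthy_checks_to_resolve:
                self.condition = Condition.RESOLVED
                self.healthy_streak = 0
                return "Alert deactivated"
        return None


alert = AlertCondition()
counts = [1, 2, 0, 0, 0, 1]  # failure count seen at each evaluation
notifications = [alert.evaluate(c) for c in counts]
print(notifications)
```

In this run, the second evaluation sends nothing because the alert is already fired, the deactivation notice arrives only on the third healthy evaluation, and the final failure can raise a new alert because the condition has returned to resolved.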

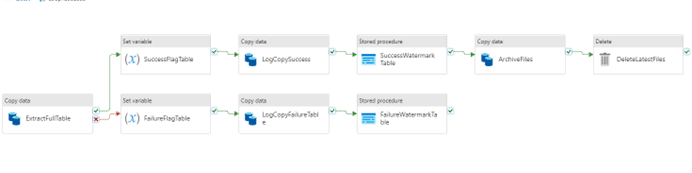

Custom Alerting in Azure Data Factory

Although Azure Monitor provides reporting on failures, notifications might need to be customized to a particular process. Examples include the start and stop of a data warehouse load or a long-running fact table process. Pipelines can take hours, and without custom notifications, staff or operations might not know of a failure.

At Smartbridge, pipelines are designed with notifications for start, success, and failure. Notifications can also be configured for specific steps that might be long-running. All aspects are driven by the parameters of the pipeline and the specific task execution. This is the standard for all pipelines at Smartbridge, so development is consistent and failures are easy to resolve.
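As a rough illustration of parameter-driven notifications, the sketch below builds a message for a start, success, or failure event. It is not Smartbridge's actual implementation: the function `build_notification`, the payload fields, and the pipeline and step names are all hypothetical.

```python
import json
from datetime import datetime, timezone

VALID_EVENTS = {"start", "success", "failure"}


def build_notification(pipeline_name, run_id, event, step=None, error=None, now=None):
    """Build a notification payload for a pipeline event (hypothetical schema)."""
    if event not in VALID_EVENTS:
        raise ValueError(f"event must be one of {sorted(VALID_EVENTS)}")
    if event == "failure" and not error:
        raise ValueError("a failure notification needs an error message")
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    # Include the step name in the subject when the event is for a specific step.
    scope = f"{pipeline_name} / {step}" if step else pipeline_name
    payload = {
        "subject": f"[{event.upper()}] {scope}",
        "pipeline": pipeline_name,
        "runId": run_id,
        "step": step,
        "event": event,
        "timestamp": timestamp,
    }
    if error:
        payload["error"] = error
    return payload


message = build_notification(
    pipeline_name="LoadDataWarehouse",  # hypothetical pipeline name
    run_id="example-run-id",            # placeholder run identifier
    event="failure",
    step="LoadFactSales",               # hypothetical long-running step
    error="Copy activity timed out",
)
print(json.dumps(message, indent=2))
```

Because every field comes from the function's arguments, the same helper could serve any pipeline or step; how the payload is delivered (email, chat, or another channel) is deliberately left out of this sketch.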

Example of capturing custom data on a pipeline
