LLMOps: Streamline Development and Boost Value

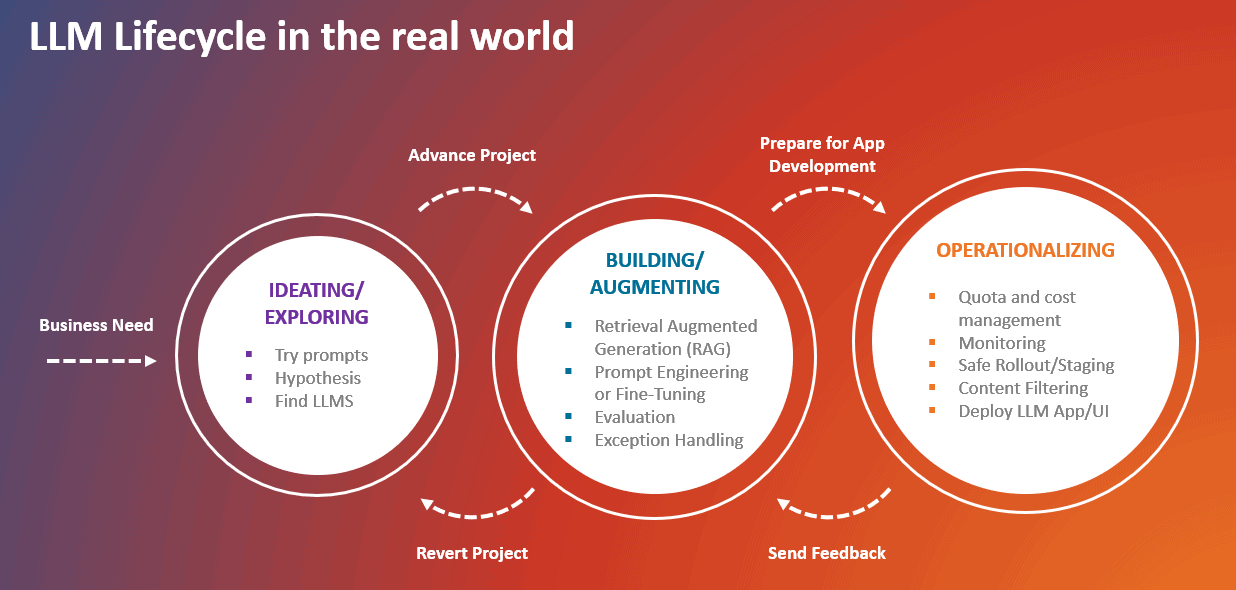

Manage and optimize the use of large language models (LLMs) with LLMOps. We’ll walk through the three stages of LLMOps: ideation, development, and deployment.

In today’s rapidly evolving technological landscape, artificial intelligence (AI) is transforming businesses across industries. Large language models have accelerated this transformation, enabling developers to build AI applications with capabilities that were previously out of reach. Harnessing these models, however, is challenging: it requires a new way of thinking and a comprehensive approach to AI app development.

Introducing LLMOps

Traditional machine learning operations (MLOps) practices may not be sufficient for developing applications powered by large language models (LLMs). LLM-based applications are open-ended: they must handle a wide range of questions and data the model has never seen before. Consequently, developers must adopt a different mindset and use new building blocks, such as vector databases, prompt engineering, and continuous evaluation, to get the most out of LLMs.

Deploying LLMs to production often involves a team of experts from various fields, including data science, user experience design, engineering, compliance or legal, and subject matter expertise. As the system grows, the team must be ready to work through complex questions, such as how to handle variance in model output or how best to address a safety issue. LLMOps supports the operational management of these LLM applications and consists of three stages: ideation, development, and deployment.

Ideation: Exploring the Possibilities with Large Language Models

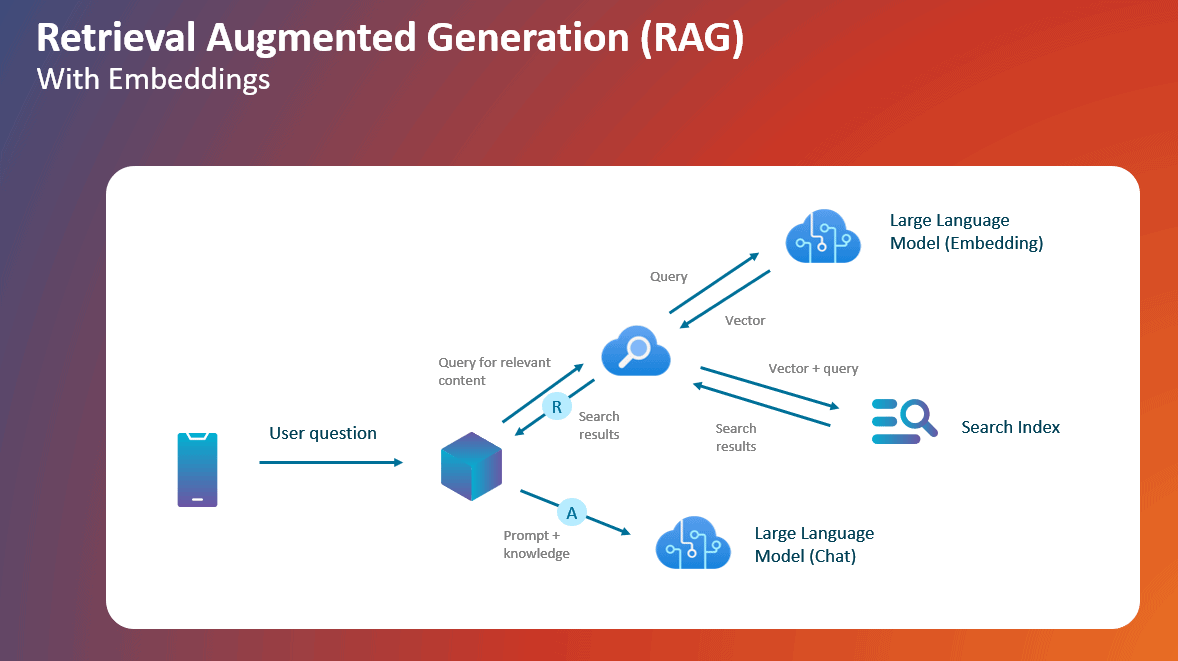

Once the business case has been identified and refined, the first stage of LLMOps is ideation, where developers select a suitable large language model from a catalog. Such a catalog can include models from OpenAI, open-source repositories, and model families such as Meta’s Llama. Once a model is chosen, developers use prompt engineering to test whether their idea or hypothesis holds promise, refining prompts until the responses align with the questions being asked. This stage also covers choosing the desired user experience, identifying the data that must be pulled into that experience (for example, through retrieval-augmented generation, or RAG), and answering business questions about expected impact.
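To make the RAG idea concrete, here is a minimal Python sketch of how retrieved text might be combined with a user question into a single prompt. The template wording, the `build_rag_prompt` function, and its `max_chunks` parameter are hypothetical illustrations rather than part of any specific product; in a real application the chunks would come from a retrieval step instead of a hard-coded list.

```python
from typing import List

# Hypothetical prompt template; the wording and placeholder names are illustrative assumptions.
PROMPT_TEMPLATE = (
    "You are a helpful assistant. Answer the question using only the context below.\n"
    "If the context does not contain the answer, say that you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_rag_prompt(question: str, retrieved_chunks: List[str], max_chunks: int = 3) -> str:
    """Combine a user question with retrieved text chunks into one grounded prompt."""
    # Keep only the top-ranked chunks so the prompt stays within the model's context window.
    selected = retrieved_chunks[:max_chunks]
    # Number each chunk so answers (and reviewers) can point back to a source.
    context = "\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(selected))
    return PROMPT_TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    chunks = [
        "Standard shipping takes 3 to 5 business days.",
        "Orders over $50 ship free within the continental US.",
    ]
    print(build_rag_prompt("How long does standard shipping take?", chunks))
```

Instructing the model to answer only from the supplied context is one common way to reduce unsupported answers; how well it works depends on the model and should be checked during evaluation.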

During the ideation stage, it is crucial to consider which data sources can contribute to a generative AI application. Developers must decide how to vectorize the data, choose an appropriate chunking technique, and consider whether to fine-tune an existing model from the catalog. Above all, AI application development depends on high-quality output: evaluation is how the team assesses the application’s performance, and continuous evaluation is key to success with generative AI.
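Chunking can be illustrated with one of the simplest common techniques: fixed-size windows that overlap, so that a sentence cut at one boundary still appears whole in a neighboring chunk. The sketch below assumes character-based chunking, and `chunk_text` with its default sizes is a hypothetical helper; production pipelines often chunk by tokens, sentences, or document sections instead.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text to avoid tiny trailing duplicates.
        if start + chunk_size >= len(text):
            break
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=2)` returns `["abcd", "cdef", "efgh", "ghij"]`. A larger overlap preserves more context across boundaries at the cost of storing more, partly redundant, chunks.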

Development: From Data Sources to Prompt Engineering

The second stage of LLMOps is development, where developers go deeper into building the AI application. This stage involves identifying relevant data sources, vectorizing the data, and refining prompts to elicit accurate and contextually appropriate responses. By combining vector databases, model fine-tuning, and prompt engineering, developers can optimize the performance and relevance of the application.
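Evaluating prompt variants can start small. The sketch below assumes a hand-written test set in which each question lists keywords a good answer should mention, and it scores each prompt template by average keyword recall. `EvalCase`, `keyword_score`, `compare_prompts`, and the stubbed model are hypothetical; real evaluations typically add metrics such as groundedness or relevance, often judged by people or by another model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """One test question plus keywords a good answer is expected to mention (assumed format)."""
    question: str
    expected_keywords: List[str]


def keyword_score(answer: str, expected_keywords: List[str]) -> float:
    """Fraction of expected keywords found in the answer, ignoring case."""
    if not expected_keywords:
        return 1.0
    text = answer.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def evaluate_prompt(template: str, cases: List[EvalCase],
                    generate: Callable[[str], str]) -> float:
    """Average keyword score of one prompt template across the test set."""
    if not cases:
        raise ValueError("need at least one test case")
    total = 0.0
    for case in cases:
        answer = generate(template.format(question=case.question))
        total += keyword_score(answer, case.expected_keywords)
    return total / len(cases)


def compare_prompts(templates: Dict[str, str], cases: List[EvalCase],
                    generate: Callable[[str], str]) -> Dict[str, float]:
    """Score every named template so variants can be compared side by side."""
    return {name: evaluate_prompt(t, cases, generate) for name, t in templates.items()}


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        # Hypothetical stand-in for a real model call.
        if "step by step" in prompt:
            return "Reset your password from the login page, then check your email for the link."
        return "Use the login page."

    cases = [EvalCase("How do I reset my password?", ["login page", "email"])]
    templates = {
        "terse": "Answer briefly: {question}",
        "detailed": "Answer step by step: {question}",
    }
    print(compare_prompts(templates, cases, fake_llm))  # {'terse': 0.5, 'detailed': 1.0}
```

Passing the model call in as a function keeps the harness independent of any particular LLM client, so the same test set can be rerun whenever a prompt or model changes.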

Vector databases let developers store vector representations (embeddings) of their data and retrieve them efficiently by similarity. When a user asks a question, the application embeds the question into the same vector space, retrieves the most similar pieces of data, and includes them in the prompt, which helps the model generate more accurate and coherent responses. Prompt engineering techniques then allow for iterative refinement of prompts to improve the application’s performance over time.
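The retrieval step can be sketched with a tiny in-memory store. This illustration rests on stated assumptions: `toy_embed` is a bag-of-words stand-in for a real embedding model, and the hypothetical `InMemoryVectorStore` performs brute-force cosine-similarity search, whereas vector databases typically use approximate nearest-neighbor indexes to scale to millions of vectors.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Minimal vector store: keeps (vector, text) pairs and does brute-force top-k search."""

    def __init__(self) -> None:
        self._items: List[Tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        # Embed once at insert time so searches only embed the query.
        self._items.append((toy_embed(text), text))

    def search(self, query: str, k: int = 2) -> List[Tuple[float, str]]:
        q = toy_embed(query)
        scored = [(cosine_similarity(q, vec), text) for vec, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
        return scored[:k]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Standard shipping takes 3 to 5 business days.")
    store.add("Our office is closed on public holidays.")
    store.add("Returns are accepted within 30 days of delivery.")
    for score, text in store.search("How many days does shipping take?", k=2):
        print(f"{score:.2f}  {text}")
```

The texts returned by `search` are what a RAG application would place into the prompt's context section.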

Deployment: Operationalizing the AI Application

The final stage of LLMOps is deployment, where the focus shifts to operationalizing the AI application. This involves considerations such as application deployment, security, and monitoring. Traditional deployment practices, including continuous integration/continuous deployment (CI/CD) tools and enterprise security settings, remain essential in the LLMOps context.

To ensure the safety and appropriateness of generative AI applications, content safety measures are incorporated into the deployment process. These measures filter out inappropriate questions and responses, safeguarding the application’s integrity. Additionally, robust monitoring capabilities allow developers to track the performance of the application in real-world scenarios, identify areas for improvement, and iterate on prompt engineering to enhance the quality of responses.
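A content safety gate can wrap the model call so that both the incoming question and the outgoing answer are screened, and the same wrapper is a natural place to emit monitoring data. The keyword blocklist, `safe_generate`, and the logged fields below are hypothetical simplifications; a production deployment would call a dedicated content-safety classifier and send metrics to a monitoring system rather than rely on string matching.

```python
import logging
import time
from typing import Callable, Iterable

logger = logging.getLogger("llm_app")

# Hypothetical blocklist; a real system would use a content-safety classifier instead.
BLOCKED_TERMS = {"credit card number", "password dump"}
REFUSAL = "Sorry, I can't help with that request."


def is_flagged(text: str, blocked_terms: Iterable[str] = BLOCKED_TERMS) -> bool:
    """Return True if any blocked term appears in the text (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in blocked_terms)


def safe_generate(question: str, generate: Callable[[str], str]) -> str:
    """Screen the question, call the model, screen the answer, and log basic metrics."""
    if is_flagged(question):
        logger.warning("input blocked")
        return REFUSAL
    start = time.perf_counter()
    answer = generate(question)
    latency_ms = (time.perf_counter() - start) * 1000
    if is_flagged(answer):
        logger.warning("output blocked (latency=%.1f ms)", latency_ms)
        return REFUSAL
    # Latency and answer length are examples of signals a monitoring dashboard might track.
    logger.info("answered (latency=%.1f ms, answer_chars=%d)", latency_ms, len(answer))
    return answer
```

Screening the output as well as the input matters because a harmless-looking question can still produce an inappropriate response.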

Real-World Examples: Leveraging the Azure AI Platform

Microsoft, working with internal teams and external customers, has developed the Azure AI Platform to address the challenges of LLMOps and help developers build high-value generative AI applications. The platform includes a comprehensive model catalog, vector database connectivity, prompt engineering capabilities, content safety measures, and model monitoring. In addition, Azure prompt flow provides the capabilities to develop an end-to-end generative AI app, powered by the LLM of choice, entirely within a secure Azure enterprise environment.

Siemens, one of the external companies leveraging the Azure AI Platform, has successfully built a Teamcenter application using Azure prompt flow features. The Teamcenter application allows employees from various locations to communicate in real time using natural language interfaces, greatly improving communication and collaboration within the organization.

Empowering Citizen Developers and Data Scientists with LLMOps Practices and Tools

End-to-end AI app development with Azure prompt flow, following LLMOps practices, offers developers new ways to leverage large language models. By adopting a comprehensive approach that spans ideation, development, and deployment, developers can build effective, contextually relevant AI applications. With the Azure AI Platform and tools like prompt flow, developers can create innovative solutions that drive business value and improve customer experiences.

By embracing LLMOps and leveraging the capabilities of prompt engineering, developers can navigate the challenges posed by open-ended problems, previously unseen data, and complex questions. Through continuous evaluation, iteration, and collaboration, developers can build AI applications that deliver accurate, contextually appropriate responses and drive meaningful outcomes for businesses and users alike.

Smartbridge has the AI knowledge and industry expertise to bring your digital agenda to life. Contact us to see how we can tailor a solution to fit your company’s needs.
